Duplicate records in Dataverse are a pain. Duplicate detection rules can help, but only if your only source of data is people entering it through the power apps forms. Duplicate detection rules don’t seem to care about API entries or bulk imports.

Obviously it’s best to handle duplicate detection in your application if you’ve got some sort of custom API, but in high-volume situations it’s not always possible – i.e. if you manage to query the database for a duplicate before the database has finished adding another record that would become a duplicate. (race condition?)

Here’s how I manage duplicate records for a custom integration – it’s not perfect, and it’s not efficient, but it works. If you’re handling more than about 1000 records per run I’d suggest looking at using the batch API instead of this method – blog post here.

How this duplicate detection flow works

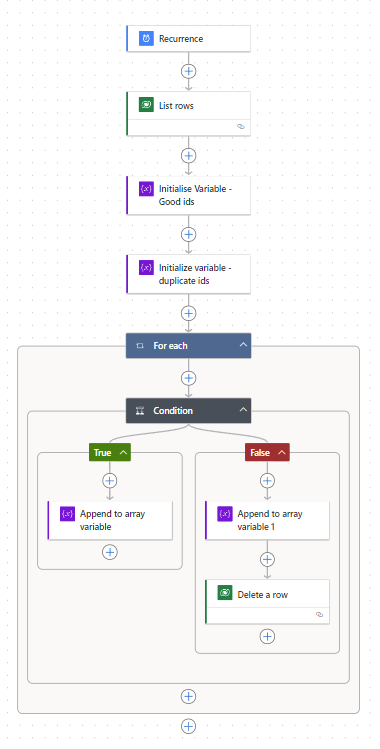

The flow runs every night, at an off-peak time and:

- Lists rows from the table I want to detect duplicates for. I have defined an ODate filter so that I’m only handling about 400 rows at a time.

duedate ge 'formatDateTime(utcNow(), 'yyyy-MM-dd')'

This filters for only the rows with a due date in the future (your filters will need to be different to suit your needs!!) - I’ve chosen to make an array for the unique IDs, and for the ones I’m deleting. The unique IDs array is necessary, but the bad IDs one is optional.

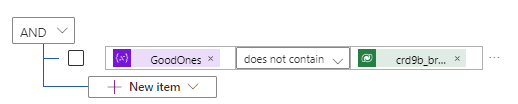

- Add a Condition (If) block and add a condition for if the unique array contains [The column that you want to check for duplicates on]. In my case, I have an ID column that is based on an external application ID. Power Automate should make a foreach loop around your condition.

- If the condition is true (the current ID is not in the GoodOnes list), we should add it to the GoodOnes list with the Append to array variable action.

- If the condition is false (the current ID is a duplicate), we should delete it.

Warning: running this flow might delete stuff you don’t want deleted!

Once the flow has run once, it should be quicker to run the following times as there shouldn’t be as many duplicates. This is a very simplistic solution, and better options do exist!

Leave a Reply